The Java Microbenchmark Harness (JMH) is a tool for measuring the performance characteristics of code and identifying bottlenecks precisely, down to the method level. This tutorial introduces JMH and shows how to create and run benchmarks, with examples.

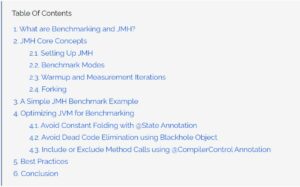

1. What are Benchmarking and JMH?

A benchmark is a standard or point of reference against which things may be compared or assessed. In programming, benchmarking means comparing the performance of code segments. Microbenchmarking narrows the focus to smaller units of code in an application, generally individual methods.

Microbenchmarking is a good way to test how a particular section of code performs in an ideal runtime setting. It is best suited to self-contained code segments such as algorithms. Note that a microbenchmark is expected not to communicate with external systems or make any input/output calls. The idea is that when we combine many such well-performing units in the application, the application as a whole performs well.

Many other Java libraries and tools exist for large-scale, application-level optimization. JMH focuses on small-scale optimization at the level of individual methods under a specific runtime (compiler + memory) setting. It helps in understanding how a method performs on typical processors and memory units.

Remember that microbenchmarks ideally keep compiler optimizations from distorting the measurement, so they show a method in isolation. Their results must be combined with the results of other profiling tools to draw correct conclusions.
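To see what a harness automates, here is a minimal hand-rolled timing sketch in plain Java. It is not JMH and should not be treated as a reliable benchmark: `NaiveTimer` and its method are hypothetical names introduced for illustration, and the numbers it produces are distorted by JIT compilation, garbage collection, and timer granularity.

```java
import java.util.function.IntSupplier;

// Hypothetical helper, not part of JMH: times a task with System.nanoTime().
final class NaiveTimer {

    // Written after the loop so the accumulated result is not trivially unused.
    static volatile long lastResult;

    private NaiveTimer() {
    }

    /**
     * Runs the task the given number of times and returns the average
     * elapsed time per invocation in nanoseconds.
     */
    static double averageNanos(IntSupplier task, int invocations) {
        if (invocations <= 0) {
            throw new IllegalArgumentException("invocations must be positive");
        }
        long sum = 0;
        long start = System.nanoTime();
        for (int i = 0; i < invocations; i++) {
            sum += task.getAsInt();
        }
        long elapsed = System.nanoTime() - start;
        lastResult = sum;
        return (double) elapsed / invocations;
    }
}
```

A call such as `NaiveTimer.averageNanos(() -> "benchmark".hashCode(), 1_000_000)` returns a number, but that number changes from run to run and mixes cold and warm executions, which is the gap that warmup iterations and forks in a harness are meant to close.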

2. JMH Core Concepts

Before going deeper, let us understand the basic usage and key terms of JMH-based microbenchmarking.

2.1. Setting Up JMH

Although there are many possible ways to integrate and use JMH, the recommended way is to create a new Maven or Gradle project used only for microbenchmarking. The project should include all the dependencies of the real application so that the two projects stay as similar as possible.

After creating the new project, add JMH's jmh-core and jmh-generator-annprocess dependencies to the build file.

<dependency>
    <groupId>org.openjdk.jmh</groupId>
    <artifactId>jmh-core</artifactId>
    <version>1.35</version>
</dependency>
<dependency>
    <groupId>org.openjdk.jmh</groupId>
    <artifactId>jmh-generator-annprocess</artifactId>
    <version>1.35</version>
</dependency>

Adding the annotation processor dependency indicates that JMH relies on annotations to configure benchmarking behavior. The @Benchmark annotation is the entry point for creating any benchmark. We can create as many benchmarks in a class as we like.

@Benchmark
public void someMethod() {
    //code
}

To execute all benchmarks, we need to start the process using the org.openjdk.jmh.Main.main() method.

import org.openjdk.jmh.annotations.Benchmark;

public class ApplicationBenchmarks {

    // Delegates to the JMH launcher, which discovers and runs the benchmarks.
    public static void main(String[] args) throws Exception {
        org.openjdk.jmh.Main.main(args);
    }

    @Benchmark
    public void someMethod() {
        //code
    }
}

After the JMH process finishes, we can see the performance numbers in the console, something like this:

Benchmark                          Mode  Cnt           Score           Error  Units
ApplicationBenchmarks.someMethod  thrpt   25  2670644438.470 ± 121534763.138  ops/s

2.2. Benchmark Modes

Although there are multiple benchmark modes in JMH, they essentially measure the performance in terms of throughput and execution time.

- Throughput Mode: measures the number of method invocations that can be completed within a unit of time and reports the result as operations per time unit (for example, ops/s). It is more like load testing of the method. A larger number indicates that the operations run faster.

- Time-based Modes: measure the time a single invocation of the method takes. These are useful for comparing different ways of doing the same thing and help in concluding which logic performs the task faster. The following time-based modes are available in JMH:

  - Average Time: measures the average time a single execution of the benchmark method takes.

  - Sample Time: samples the execution time of individual invocations and reports how those times are distributed, including the minimum and maximum time for a single invocation.

  - Single Shot Time: runs the compared methods once, without warming up the JVM. It is useful for making quick decisions during development.
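The difference between the modes listed above comes down to which number is derived from the recorded invocations. The following sketch shows that arithmetic in plain Java. It is an illustration only, not how JMH computes its scores: `ModeMetrics` and its methods are hypothetical names, and the input array is assumed to be non-empty.

```java
import java.util.Arrays;

// Hypothetical illustration, not JMH code: derives mode-like metrics
// from a non-empty set of recorded invocation durations (in nanoseconds).
final class ModeMetrics {

    private ModeMetrics() {
    }

    /** Throughput: completed operations per second. */
    static double throughputPerSecond(long[] durationsNanos) {
        long totalNanos = Arrays.stream(durationsNanos).sum();
        return durationsNanos.length / (totalNanos / 1_000_000_000.0);
    }

    /** Average time: mean duration of one operation, in nanoseconds. */
    static double averageNanos(long[] durationsNanos) {
        return Arrays.stream(durationsNanos).average().getAsDouble();
    }

    /** Sample time: the p-th percentile (0 < p <= 100), nearest-rank method. */
    static long percentileNanos(long[] durationsNanos, double p) {
        long[] sorted = durationsNanos.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p / 100.0 * sorted.length);
        return sorted[Math.max(rank, 1) - 1];
    }
}
```

For four invocations that took 100, 200, 300 and 400 ns, the average time is 250 ns, the throughput is about 4 million operations per second, and the 100th percentile (the maximum) is 400 ns. Throughput and average time are two views of the same total, while the percentiles expose the spread that an average hides.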

Use the @BenchmarkMode annotation to specify the preferred mode.

@Benchmark
@BenchmarkMode(Mode.Throughput)
public void someMethod() {
    //code
}

2.3. Warmup and Measurement Iterations

When we start the JVM and load the application, the JVM loads the classes needed for startup. The first time we perform an action in the application, the JVM lazily loads the additional classes required to process the request. Once the necessary classes are loaded, all subsequent similar actions reuse them. For this reason, the first request to a recently started server usually takes more time than later requests.

In microbenchmarking, where we are solely focused on the performance of the code unit, we must keep such one-time startup costs out of the measurement. So JMH executes the microbenchmark a few times before it starts capturing the performance. This makes sure that the JVM is fully optimized and resembles an ideal setup for the application. This phase is called the JVM warmup.
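The warmup effect can be observed without JMH by timing the same work over several rounds. The sketch below is a hand-rolled probe under stated assumptions: `WarmupProbe`, `work` and `timeRounds` are hypothetical names, and the summing loop merely stands in for the code under test.

```java
// Hypothetical helper, not part of JMH: records how long each round of a
// task takes so that early (cold) rounds can be compared with later ones.
final class WarmupProbe {

    // Written each round so the result of the work is not unused.
    static volatile long sink;

    private WarmupProbe() {
    }

    /** Sums the integers below n; stands in for the code under test. */
    static long work(int n) {
        long sum = 0;
        for (int i = 0; i < n; i++) {
            sum += i;
        }
        return sum;
    }

    /** Returns the elapsed nanoseconds of each round, in execution order. */
    static long[] timeRounds(int rounds, int n) {
        long[] elapsed = new long[rounds];
        for (int r = 0; r < rounds; r++) {
            long start = System.nanoTime();
            sink = work(n);
            elapsed[r] = System.nanoTime() - start;
        }
        return elapsed;
    }
}
```

On a typical JVM the first few entries of `WarmupProbe.timeRounds(10, 1_000_000)` are often larger than the later ones, although this is not guaranteed and depends on the JVM and machine. Discarding those early rounds is what warmup iterations do in a harness.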

We can configure the number of times a benchmark should be executed to warm up the JVM using the @Warmup annotation. The default number of warmup iterations is 5.

@Benchmark
@BenchmarkMode(Mode.Throughput)
@Warmup(iterations = 5, time = 100, timeUnit = TimeUnit.MILLISECONDS)
public void someMethod() {
    //code
}

After the JVM is warmed up, we can execute the method in performance measurement mode using the @Measurement annotation.

@Benchmark
@BenchmarkMode(Mode.Throughput)
@Warmup(iterations = 5, time = 100, timeUnit = TimeUnit.MILLISECONDS)
@Measurement(iterations = 5, time = 100, timeUnit = TimeUnit.MILLISECONDS)
public void someMethod() {
    //code
}

2.4. Forking

Real-world applications do not run in a single thread; they use multiple threads to serve incoming requests. Forking, however, is about processes rather than threads: JMH runs the benchmark in separate, freshly started JVM processes so that state left behind by one run does not influence another. The number of threads is configured separately, with the @Threads annotation.

In the following example, the benchmark is run in 5 forked JVM processes whose results are measured. Before that, 2 warmup forks are run and their results are discarded.

@Fork(value = 5, warmups = 2)

It also allows us to add Java options to the java command line, for example:

@Fork(value = 5, warmups = 2, jvmArgs = {"-Xms2G", "-Xmx2G"})

The @Warmup, @Measurement, @BenchmarkMode and @Fork annotations can be applied to a specific method, or to the enclosing class to take effect for all benchmark methods in the class.
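Conceptually, a fork is a separate JVM process started with its own command-line options. The sketch below only builds such a command line in plain Java. It is not how JMH launches its forks internally: `ForkSketch`, its methods, and the main class name used in the usage note are hypothetical.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch, not JMH internals: builds the command line for a
// child JVM that reuses the current Java installation and classpath.
final class ForkSketch {

    private ForkSketch() {
    }

    /** Builds: <java.home>/bin/java [jvmArgs...] -cp <classpath> <mainClass>. */
    static List<String> childJvmCommand(String mainClass, List<String> jvmArgs) {
        String javaBin = System.getProperty("java.home")
                + File.separator + "bin" + File.separator + "java";
        List<String> command = new ArrayList<>();
        command.add(javaBin);
        command.addAll(jvmArgs);
        command.add("-cp");
        command.add(System.getProperty("java.class.path"));
        command.add(mainClass);
        return command;
    }

    /** Prepares, but does not start, a process for the child JVM. */
    static ProcessBuilder prepare(String mainClass, List<String> jvmArgs) {
        return new ProcessBuilder(childJvmCommand(mainClass, jvmArgs)).inheritIO();
    }
}
```

Calling `ForkSketch.prepare("com.example.Main", List.of("-Xms2G", "-Xmx2G")).start()` would launch one such child JVM. Because each child starts with empty JIT profiles and a fresh heap, results from several forks show how much the score varies between JVM runs.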

3. A Simple JMH Benchmark Example

The following ApplicationBenchmarks class compares the performance of ArrayList and LinkedList by inserting 1 million strings and then fetching all 1 million strings from the list.

import java.util.ArrayList;
import java.util.LinkedList;
import java.util.concurrent.TimeUnit;
import org.openjdk.jmh.annotations.Benchmark;
import org.openjdk.jmh.annotations.BenchmarkMode;
import org.openjdk.jmh.annotations.Measurement;
import org.openjdk.jmh.annotations.Mode;
import org.openjdk.jmh.annotations.Scope;
import org.openjdk.jmh.annotations.State;
import org.openjdk.jmh.annotations.Warmup;
import org.openjdk.jmh.infra.Blackhole;

@BenchmarkMode(Mode.Throughput)
@Warmup(iterations = 3, time = 10, timeUnit = TimeUnit.MILLISECONDS)
@Measurement(iterations = 3, time = 10, timeUnit = TimeUnit.MILLISECONDS)
public class ApplicationBenchmarks {

    public static void main(String[] args) throws Exception {
        org.openjdk.jmh.Main.main(args);
    }

    // Benchmark inputs live in a state object so they are not compile-time constants.
    @State(Scope.Benchmark)
    public static class Params {
        public int listSize = 1_000_000; // 1M
        public double b = 1;
    }

    @Benchmark
    public static void addSelectUsingArrayList(Params param, Blackhole blackhole) {
        ArrayList<String> arrayList = new ArrayList<>();
        for (int i = 0; i < param.listSize; i++) {
            arrayList.add("prefix_" + i);
        }
        // Consume every element so the reads are not eliminated as dead code.
        for (int i = 0; i < param.listSize; i++) {
            blackhole.consume(arrayList.get(i));
        }
    }

    @Benchmark
    public static void addSelectUsingLinkedList(Params param, Blackhole blackhole) {
        LinkedList<String> linkedList = new LinkedList<>();
        for (int i = 0; i < param.listSize; i++) {
            linkedList.add("prefix_" + i);
        }
        // Index-based get() walks the linked list, so this loop is expected to be slow.
        for (int i = 0; i < param.listSize; i++) {
            blackhole.consume(linkedList.get(i));
        }
    }
}

4. Optimizing JVM for Benchmarking

Although benchmarks seem easy to configure and create, they are not, largely because of the internal optimizations done by the JVM. To truly measure the performance of a method, we must keep the JVM from optimizing away the very work we want to measure.

4.1. Avoid Constant Folding with @State Annotation

One common mistake in creating benchmarks is using constant values in computations, for example a variable that is declared and initialized inline with a literal. When we compute on such constants, the result is the same every time. The JVM is smart enough to identify such computations, skip them altogether, and directly use the precomputed value.
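Folding by the JIT compiler at run time is hard to observe directly, but the Java compiler (javac) performs a similar folding of constant expressions at compile time, and that one is easy to see. The sketch below is an illustration of the general idea only, not of the JIT behavior itself. `ConstantFoldingDemo` is a hypothetical name, and the strings are compared with `==` on purpose to check object identity.

```java
// Illustration only: observes compile-time constant folding through
// string identity. Comparing strings with == is done here on purpose.
final class ConstantFoldingDemo {

    private ConstantFoldingDemo() {
    }

    /** Both operands are compile-time constants, so the sum is folded into the literal "ab". */
    static boolean foldedAtCompileTime() {
        final String a = "a"; // a constant variable
        return (a + "b") == "ab";
    }

    /** The prefix is only known at run time, so the concatenation creates a new String. */
    static boolean computedAtRunTime(String prefix) {
        return (prefix + "b") == "ab";
    }
}
```

`foldedAtCompileTime()` returns true because no concatenation happens at run time, while `computedAtRunTime("a")` returns false because the value has to be computed. Passing inputs in from outside the method is the same idea that state objects apply to benchmarks.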

For example, in the following method we add two constant values:

public static double add() {
    double a = 1;
    double b = 1;
    return a + b;
}

The JVM will identify the pattern and replace the add operation with a constant value. This is called constant folding.

public static double add() {
    return 2;
}

We can solve the constant folding problem using @State-annotated parameter classes. State objects are usually injected into benchmark methods as arguments, and JMH takes care of their instantiation and sharing, thus avoiding constant folding.

@State(Scope.Benchmark)
public static class Params {
    public double a = 1;
    public double b = 1;
}

@Benchmark
public static double add(Params params) {
    return params.a + params.b;
}

4.2. Avoid Dead Code Elimination using Blackhole Object

The JIT compiler is intelligent enough to identify redundant statements that do not contribute to the result of the benchmark method. The compiler eliminates such statements when generating the native code. This is called dead code elimination.

For example, in the following method the 'new Object()' statement is redundant and does nothing. The JIT compiler identifies this statement and removes it from the compiled code.

@Benchmark
public static double add(Params params) {
    new Object();
    return params.a + params.b;
}

Although we may not want to put such dead code in our benchmark, sometimes we want to mimic the real application, where such objects are created for a purpose, and measure their impact in the benchmarked method.

The Blackhole object is an excellent way to force the compiler to keep this statement and generate code for it. The Blackhole object "consumes" the values, giving the JIT no information about whether the value is actually used afterward.
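The idea of "consuming" a value can be sketched in plain Java with a sink that stores whatever it is given. This is a simplified stand-in, not how JMH implements Blackhole, and it offers much weaker guarantees. `SimpleSink` and its methods are hypothetical names, and single-threaded use is assumed.

```java
// Hypothetical stand-in for the idea behind Blackhole, not JMH's implementation:
// each value is written to a volatile field, so the code that produced it
// has an effect the compiler cannot treat as unused.
final class SimpleSink {

    private volatile Object last; // volatile write makes the value escape
    private long consumed;        // number of values seen (single-threaded use assumed)

    /** Records the value so that producing it is not dead code. */
    void consume(Object value) {
        last = value;
        consumed++;
    }

    long consumedCount() {
        return consumed;
    }

    Object lastValue() {
        return last;
    }
}
```

With such a sink, a statement like `sink.consume(new Object())` can no longer be dropped as redundant, because the object is stored where other code could read it. The volatile write has a cost of its own that ends up in the measurement, which is one reason to prefer the harness-provided Blackhole in real benchmarks.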

@Benchmark
public static double add(Params params, Blackhole blackhole) {
    blackhole.consume(new Object());
    return params.a + params.b;
}

4.3. Include or Exclude Method Calls using @CompilerControl Annotation

Another useful annotation, @CompilerControl, may be used to affect the compilation of particular methods in the benchmarks. This annotation tells the compiler to compile, inline (or not), or exclude (or not) a particular method.

In the following example, we ask the compiler to inline the method during compilation. This lets us control how helper methods that affect the performance of the benchmark are compiled and inlined.

@Benchmark
public static double add(Params params, Blackhole blackhole) {
    return params.a + params.b + someOtherMethod(params);
}

// Static and given the state object, so the static benchmark method can call it.
@CompilerControl(CompilerControl.Mode.INLINE)
private static double someOtherMethod(Params params) {
    return params.a * params.b;
}

5. Best Practices

After understanding the nitty-gritty of JMH, let us go through some best practices which will help in minimizing the mistakes:

- In most cases, the best person to write a benchmark is the programmer who is writing the actual method in the application. When multiple people are involved in writing the actual code and benchmarks, there is always a chance of misinterpretation.

- Another good suggestion is to use the same class structure as in the real application. The hierarchy of classes and interfaces matters a lot in the case of micro-optimizations.

- Be mindful of the runtime environment, such as configured memory in the machine and memory used for running the benchmarks. Check out the memory size and disk type (conventional/SSD). They perform differently and give different results. Ideally, benchmarks should be run with a very strict and defined machine configuration to have consistent results all the time.
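One way to act on the last point is to record the environment next to every set of results. The helper below is a small sketch in plain Java: `EnvironmentReport` is a hypothetical name, and the selection of properties is an assumption about what is worth recording, not a complete list.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: collects basic facts about the machine and JVM so
// they can be stored next to benchmark results.
final class EnvironmentReport {

    private EnvironmentReport() {
    }

    /** Returns the collected facts in a stable, insertion-ordered map. */
    static Map<String, String> collect() {
        Runtime runtime = Runtime.getRuntime();
        Map<String, String> info = new LinkedHashMap<>();
        info.put("java.version", System.getProperty("java.version"));
        info.put("java.vm.name", System.getProperty("java.vm.name"));
        info.put("os.name", System.getProperty("os.name"));
        info.put("os.arch", System.getProperty("os.arch"));
        info.put("availableProcessors", String.valueOf(runtime.availableProcessors()));
        info.put("maxMemoryBytes", String.valueOf(runtime.maxMemory()));
        return info;
    }
}
```

Printing `EnvironmentReport.collect()` at the start of a run makes it easier to tell later whether two sets of numbers came from comparable machines and JVM settings.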

6. Conclusion

In this JMH tutorial, we learned how JMH is used to measure the performance of Java methods in different modes. We learned the core terminology used in JMH and how to use different annotations. We also learned to avoid unwanted optimizations by the JVM and its JIT compiler while compiling and executing the benchmark method.

Use microbenchmarks for creating performance documentation for important code segments in your application. This is especially true for library creators and programmers writing complex algorithms.

Happy Learning !!
