In the context of AI and LLMs, prompts are the inputs supplied to a model to elicit a specific output. How we phrase and structure a prompt greatly influences the model’s response. When LLMs were first introduced, prompts were simple strings: you asked a question, and the model answered. As the models evolved, so did their ability to distinguish between a question and an instruction.

Spring AI’s PromptTemplate provides a higher-level abstraction for building the messages sent to LLMs. Let’s learn to craft different types of prompts with examples.

String promptText = """

Tell about {topic} to a {age} old person.

""";

//Get from user

String topic = "Black Hole";

int age = 14;

PromptTemplate promptTemplate = new PromptTemplate(promptText);

Message message = promptTemplate.createMessage(Map.of("topic", topic, "age", age));

Prompt prompt = new Prompt(List.of(message, message2, ...));

Generation generation = chatModel.call(prompt).getResult();

1. Prerequisite

Before running the examples in this article, make sure you have set up the OpenAI API key in your environment and referenced it in the application.properties file.

For detailed instructions, refer to the Getting Started with Spring AI guide.

Additionally, make sure you have included the necessary dependency in the pom.xml or build.gradle file, as applicable.

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-openai-spring-boot-starter</artifactId>

</dependency>

dependencies {

implementation 'org.springframework.ai:spring-ai-openai-spring-boot-starter'

}

2. Different Types of Roles in a Conversation with AI

In the context of a prompt, roles define the perspective from which each participant contributes. They ensure that the right action is taken at each step of a conversation with an LLM.

A simple conversation generally involves only two roles: User and Assistant. The user asks the questions, and the assistant provides the answers. Complex and controlled conversations involve more roles.

| ROLE | API/Class | Description |
|---|---|---|
| USER | UserMessage | Questions or other input from you, the user. |
| ASSISTANT | AssistantMessage | The LLM’s answers and guidance in response to user questions. |
| SYSTEM | SystemMessage | Sets the ground rules of the conversation. These are instructions given to the LLM before the conversation starts; they control how the LLM interprets and replies to user messages. OpenAI has some excellent examples of system prompts. |
| FUNCTION | FunctionMessage | Specific tasks or operations that contribute to the final response from the LLM. Mostly used for tasks the LLM cannot do itself, such as invoking external APIs to fetch real-time data or performing complex scientific calculations. |
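To make the idea of roles concrete, here is a minimal plain-Java sketch of a conversation as an ordered list of role-tagged turns. The `Role` enum and `Turn` record are hypothetical stand-ins invented for this illustration; they are not Spring AI classes and only mirror the roles listed in the table.

```java
import java.util.List;

class RolesSketch {

    // Hypothetical stand-ins for the roles in the table; not Spring AI types.
    enum Role { SYSTEM, USER, ASSISTANT, FUNCTION }

    // One turn of the conversation: who is speaking and what they said.
    record Turn(Role role, String text) { }

    /** Formats a conversation as one "ROLE: text" line per turn. */
    static String transcript(List<Turn> turns) {
        StringBuilder sb = new StringBuilder();
        for (Turn turn : turns) {
            sb.append(turn.role()).append(": ").append(turn.text()).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        List<Turn> conversation = List.of(
                new Turn(Role.SYSTEM, "You are a concise travel guide."),
                new Turn(Role.USER, "Suggest one place to visit in Dubai."));
        System.out.print(transcript(conversation));
    }
}
```

In Spring AI itself, each role has a dedicated message class, as listed in the table.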

3. Prompt API

At the top level of the hierarchy, the request and response to the LLM are represented by two interfaces: ModelRequest and ModelResponse. As the names suggest, ModelRequest represents the request sent to the AI model, and ModelResponse represents the response received from it.

These interfaces are implemented by classes specific to each interaction style, such as text chat, audio, or image.

| Interface | Implementing Classes |
|---|---|
| ModelRequest | AudioTranscriptionPrompt, EmbeddingRequest, ImagePrompt, Prompt |
| ModelResponse | AudioTranscriptionResponse, ChatResponse, EmbeddingResponse, ImageResponse |
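The request/response split can be pictured with a small plain-Java sketch. The `Request` and `Response` interfaces, the two records, and `COUNTING_MODEL` below are simplified, hypothetical stand-ins written for this illustration; the real Spring AI interfaces carry more information (such as options and metadata), and no actual model is called here.

```java
import java.util.List;
import java.util.function.Function;

class RequestResponseSketch {

    // Hypothetical, simplified stand-ins; not the Spring AI interfaces.
    interface Request<T> { T instructions(); }
    interface Response<R> { R result(); }

    // A text-style request that carries a list of messages.
    record TextRequest(List<String> messages) implements Request<List<String>> {
        public List<String> instructions() { return messages; }
    }

    // A text-style response that carries a single string.
    record TextResponse(String text) implements Response<String> {
        public String result() { return text; }
    }

    // Placeholder "model" that only reports how many messages it received.
    static final Function<TextRequest, TextResponse> COUNTING_MODEL =
            request -> new TextResponse(
                    "received " + request.instructions().size() + " message(s)");

    public static void main(String[] args) {
        TextRequest request = new TextRequest(List.of("SYSTEM: be brief", "USER: hello"));
        System.out.println(COUNTING_MODEL.apply(request).result());
    }
}
```

The point of the shape is that each interaction style can define its own request and response types while sharing one calling pattern.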

Now, inside a prompt, we can add messages for different roles. For example, a Prompt instance can accept a list of messages:

public class Prompt {

private final List<Message> messages;

// ...

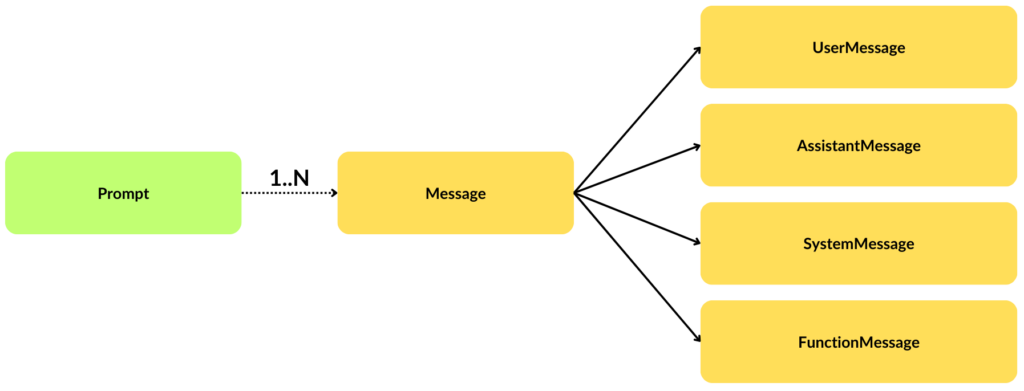

}The Message is an interface that encapsulates the necessary information for representing any type of message during the conversation. For each type of role, we can create the specific message instance (AssistantMessage, ChatMessage, FunctionMessage, SystemMessage, UserMessage) as shown in the following image:

We can create any number of messages (as necessary for the conversation) and send them to the AI model as follows:

// Get with dependency injection

OpenAiChatModel chatModel;

// ...

Message userMessage = new UserMessage("USER: ...");

Message systemMessage = new SystemMessage("SYSTEM: ...");

Prompt prompt = new Prompt(List.of(userMessage, systemMessage));

Generation generation = chatModel.call(prompt).getResult();

System.out.println(generation.getOutput().getContent());

3. PromptTemplate API

Prompts or messages are not always simple strings. They can be complex formatted strings produced by interpolating several parameters into a template string. The org.springframework.ai.chat.prompt.PromptTemplate class is at the heart of the prompt templating process. Spring AI uses the third-party StringTemplate engine, developed by Terence Parr, to construct and interpolate prompts.
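As a rough illustration of what interpolation means, the following plain-Java sketch replaces `{name}`-style placeholders with values from a map. It is not how StringTemplate or PromptTemplate is implemented; the `render` method and the regular expression are assumptions made for this example, and real template engines support far more than simple substitution.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class TemplateSketch {

    // Matches placeholders such as {subject} or {age}.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{(\\w+)\\}");

    /** Replaces every {key} in the template with the matching value from the model map. */
    static String render(String template, Map<String, Object> model) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (matcher.find()) {
            String key = matcher.group(1);
            if (!model.containsKey(key)) {
                throw new IllegalArgumentException("No value supplied for placeholder: " + key);
            }
            // quoteReplacement keeps '$' and '\' in values from being read as regex syntax.
            matcher.appendReplacement(out,
                    Matcher.quoteReplacement(String.valueOf(model.get(key))));
        }
        matcher.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        String text = render("Tell me about {subject}. Explain if I am {age} years old.",
                Map.of("subject", "USA Elections", "age", 14));
        System.out.println(text);
        // prints: Tell me about USA Elections. Explain if I am 14 years old.
    }
}
```

Failing fast on a missing placeholder value is a design choice of this sketch; it surfaces a misconfigured prompt before anything is sent to a model.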

The PromptTemplate class is further extended by the following subclasses; their usage remains the same.

- AssistantPromptTemplate

- FunctionPromptTemplate

- SystemPromptTemplate

The following is a simple example of the PromptTemplate class.

PromptTemplate promptTemplate = new PromptTemplate("Tell me about {subject}. Explain if I am {age} years old.");

//Obtain these values from user

String subject = "USA Elections";

int age = 14;

Prompt prompt = promptTemplate.create(Map.of("subject", subject, "age", age));

Generation generation = chatModel

.call(prompt)

.getResult();

System.out.println(prompt.getContents());

System.out.println(generation.getOutput().getContent());

The program output:

Tell me about USA Elections. Explain if I am 14 years old.

USA Elections are the process where people in the United States choose who will be their leaders, such as the President, Senators, and Representatives. .....

4. Combining Multiple Prompts

When multiple roles are involved in the conversation, we can create a Message for each role, as required. We then pass all the messages as a List to the Prompt object, which is sent to the chat model.

//1. Template for user message

String userText = """

Tell me about five most famous tourist spots in {location}.

Write at least a sentence for each spot.

""";

String location = "Dubai(UAE)"; //Get from user

PromptTemplate userPromptTemplate = new PromptTemplate(userText);

Message userMessage = userPromptTemplate.createMessage(Map.of("location", location));

//2. Template for system message

String systemText = """

You are a helpful AI assistant that helps people find information. Your name is {name}

In your first response, greet the user, quick summary of answer and then do not repeat it.

Next, you should reply to the user's request.

Finish with thanking the user for asking question in the end.

""";

SystemPromptTemplate systemPromptTemplate = new SystemPromptTemplate(systemText);

Message systemMessage = systemPromptTemplate.createMessage(Map.of("name", "Alexa"));

//3. Prompt message containing user and system messages

Prompt prompt = new Prompt(List.of(userMessage, systemMessage));

//4. API invocation and result extraction

Generation generation = chatModel.call(prompt).getResult();

System.out.println(generation.getOutput().getContent());

5. Injecting Template Strings as Resource

So far, we have defined the template strings in the Java source file. Spring also allows us to put the prompt text in a Resource file and inject it directly into a PromptTemplate.
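The idea of keeping template text outside the source code can be sketched in plain Java, without Spring. In the example below, a temporary file stands in for a template file on the classpath, and `loadAndFill` is a hypothetical helper written for this illustration; it does not reflect how Spring resolves or injects a Resource.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

class TemplateFileSketch {

    /** Reads a template file as UTF-8 and substitutes the {name} placeholder. */
    static String loadAndFill(Path templateFile, String name) throws IOException {
        String template = Files.readString(templateFile, StandardCharsets.UTF_8);
        return template.replace("{name}", name);
    }

    public static void main(String[] args) throws IOException {
        // A temporary file stands in for a template stored with the application.
        Path file = Files.createTempFile("system-message", ".st");
        Files.writeString(file, "You are a helpful AI assistant. Your name is {name}.");
        System.out.println(loadAndFill(file, "Alexa"));
        Files.delete(file);
    }
}
```

Externalizing the text this way lets the prompt wording change without recompiling the Java code.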

For example, we can define the system prompt in the ‘/resources/prompts/system-message.st’ file as follows:

You are a helpful AI assistant that helps people find information. Your name is {name}

In your first response, greet the user, quick summary of answer and then do not repeat it.

Next, you should reply to the user's request.

Finish with thanking the user for asking question in the end.

Then refer to this file in the prompt template as follows:

@Value("classpath:/prompts/system-message.st")

private Resource promptSystemMessage;

// Pass the injected Resource instead of an inline template string
SystemPromptTemplate systemPromptTemplate = new SystemPromptTemplate(promptSystemMessage);

Message systemMessage = systemPromptTemplate.createMessage(Map.of("name", "Alexa"));

Prompt prompt = new Prompt(List.of(..., systemMessage));

//...

6. Summary

This Spring AI tutorial discussed the classes and interfaces behind the prompt templating functionality that the module provides. Experiment with the different classes and observe their outputs to understand how to use them more effectively.

To see what the actual requests and responses look like, you can check out the LangChain4J Structured Output Example. In this example, we have logged the actual request and response to the OpenAI API.

Happy Learning !!
