Spring AI currently supports only OpenAI’s Whisper model for multilingual speech recognition and transcription, with output in formats such as JSON or plain text. For the latest updates on available models, refer to the OpenAI website.

1. Getting Started

Because Spring AI supports only OpenAI models for transcription, OpenAiAudioTranscriptionModel is the only class available for creating transcriptions from audio files. When additional transcription providers are implemented, a common AudioTranscriptionModel interface will be extracted.

To work with the audio transcription model, we must include the ‘spring-ai-openai-spring-boot-starter’ dependency in the project. Refer to Getting Started with Spring AI to set up the Spring AI BOM in the project dependencies.

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-openai-spring-boot-starter</artifactId>

</dependency>

Spring Boot’s autoconfiguration, alongside other beans for chat, audio, and image models, creates an instance of the OpenAiAudioTranscriptionModel class with the following default configuration values. We can change these properties as required.

# API Key is mandatory

spring.ai.openai.api-key=${OPENAI_API_KEY}

# Default Configuration Values

spring.ai.openai.audio.transcription.options.model=whisper-1

# Response format: json, text, srt, verbose_json or vtt

spring.ai.openai.audio.transcription.options.response-format=json

# Sampling temperature, between 0 and 1

spring.ai.openai.audio.transcription.options.temperature=0

# Timestamp granularities: segment and word (either or both)

spring.ai.openai.audio.transcription.options.timestamp_granularities=segment

The supported output formats are as follows:

json: structured response format.

text: plain text data.

srt: file format for subtitles.

verbose_json: json with additional metadata.

vtt: for displaying timed text tracks (such as subtitles or captions) in web video players.
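When the response format is set to srt, the transcription comes back as subtitle text rather than a single string of prose. The following is a minimal sketch of how such output could be split into individual cues using only the JDK. It assumes the standard SubRip layout: a sequence number, a `start --> end` line, then one or more text lines, with blank lines between cues. The SrtParser class and Cue record are hypothetical names used for illustration and are not part of Spring AI.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper (not part of Spring AI): splits SRT text into cues.
class SrtParser {

    // One subtitle cue: sequence number, timestamps as written in the file, and the text.
    record Cue(int index, String start, String end, String text) {}

    static List<Cue> parse(String srt) {
        List<Cue> cues = new ArrayList<>();
        // Cues are separated by one or more blank lines.
        for (String block : srt.strip().split("\\R\\s*\\R")) {
            String[] lines = block.strip().split("\\R");
            if (lines.length < 2) {
                continue; // not a complete cue, skip it
            }
            String[] times = lines[1].split("-->");
            if (times.length < 2) {
                continue; // timing line is malformed, skip it
            }
            // Assumes the first line is a valid integer; malformed input throws NumberFormatException.
            int index = Integer.parseInt(lines[0].strip());
            String text = String.join("\n", Arrays.copyOfRange(lines, 2, lines.length));
            cues.add(new Cue(index, times[0].strip(), times[1].strip(), text));
        }
        return cues;
    }

    public static void main(String[] args) {
        String sample = """
                1
                00:00:00,000 --> 00:00:02,500
                Hello and welcome.

                2
                00:00:02,500 --> 00:00:05,000
                This is a test.
                """;
        parse(sample).forEach(System.out::println);
    }
}
```

The timestamps are kept as strings here to keep the sketch short; converting them to java.time.Duration values would be a natural next step if the cues need to be shifted or merged.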

We can supply additional optional properties to control the model’s output style:

# An optional text to guide the model’s style or continue a previous audio segment.

# The prompt should match the audio language.

spring.ai.openai.audio.transcription.options.prompt={prompt}

# The language of the input audio in ISO-639-1 format

spring.ai.openai.audio.transcription.options.language={language}

Next, we can inject the OpenAiAudioTranscriptionModel into any Spring-managed bean.

@RestController

class TranscriptionController {

private final OpenAiAudioTranscriptionModel transcriptionModel;

TranscriptionController(OpenAiAudioTranscriptionModel transcriptionModel) {

this.transcriptionModel = transcriptionModel;

}

// handler methods

}

2. Manual Configuration

To create the OpenAiAudioTranscriptionModel bean with default configurations, we only need the API key:

var openAiAudioApi = new OpenAiAudioApi(System.getenv("OPENAI_API_KEY"));

var transcriptionModel = new OpenAiAudioTranscriptionModel(openAiAudioApi);

To override the defaults with custom parameters, we can use the OpenAiAudioTranscriptionOptions class as follows:

OpenAiAudioTranscriptionOptions options = OpenAiAudioTranscriptionOptions.builder()

.withLanguage("en")

.withPrompt("Create transcription for this audio file.")

.withTemperature(0f)

.withResponseFormat(TranscriptResponseFormat.TEXT)

.build();

var openAiAudioApi = new OpenAiAudioApi(System.getenv("OPENAI_API_KEY"));

var transcriptionModel = new OpenAiAudioTranscriptionModel(openAiAudioApi, options);

3. Speech to Text Transcription Example

Once we have the OpenAiAudioTranscriptionModel bean initialized, we can use its call() method to supply the audio file that needs to be transcribed to text.

@Value("classpath:speech.mp3")

Resource audioFile;

AudioTranscriptionResponse response = transcriptionModel.call(new AudioTranscriptionPrompt(audioFile));

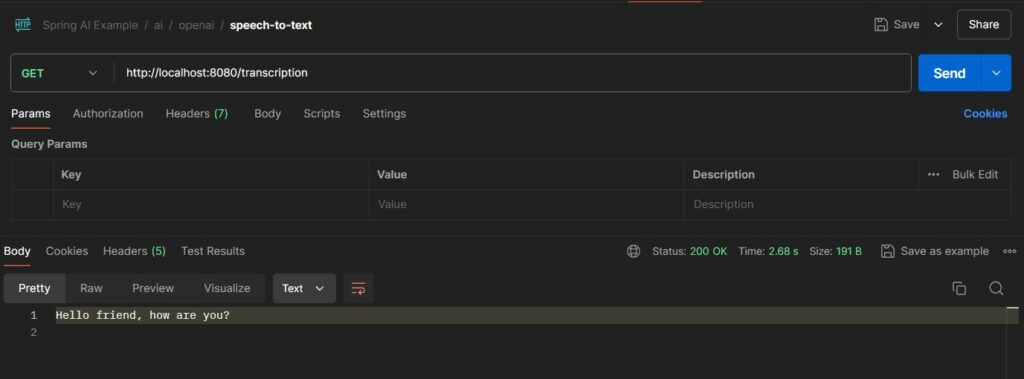

String text = response.getResult().getOutput();

For example, take the following controller method:

@RestController

class SpeechController {

private final OpenAiAudioTranscriptionModel transcriptionModel;

SpeechController(OpenAiAudioTranscriptionModel transcriptionModel) {

this.transcriptionModel = transcriptionModel;

}

@GetMapping("/transcription")

String speech(@Value("classpath:speech.mp3") Resource audioFile) {

return transcriptionModel.call(new AudioTranscriptionPrompt(audioFile))

.getResult()

.getOutput();

}

}

We used the speech.mp3 audio file for demonstration purposes. The transcribed text is returned as the API response.
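Before sending an audio file for transcription, it can be useful to reject files that the API is likely to refuse. The following is a minimal sketch of such a pre-flight check in plain Java. The 25 MB size limit and the list of accepted extensions are assumptions based on OpenAI's speech-to-text guide at the time of writing and should be verified against the current documentation. AudioFilePreflight is a hypothetical helper, not part of Spring AI.

```java
import java.util.Locale;
import java.util.Optional;
import java.util.Set;

// Hypothetical helper (not part of Spring AI): checks a file before it is sent for transcription.
class AudioFilePreflight {

    // Assumption: limits as documented by OpenAI at the time of writing; verify before relying on them.
    static final long MAX_BYTES = 25L * 1024 * 1024;
    static final Set<String> SUPPORTED_EXTENSIONS =
            Set.of("mp3", "mp4", "mpeg", "mpga", "m4a", "wav", "webm");

    // Returns an error message if the file looks unsuitable, or an empty Optional if it passes both checks.
    static Optional<String> check(String filename, long sizeInBytes) {
        int dot = filename.lastIndexOf('.');
        String extension = dot < 0 ? "" : filename.substring(dot + 1).toLowerCase(Locale.ROOT);
        if (!SUPPORTED_EXTENSIONS.contains(extension)) {
            return Optional.of("Unsupported audio format: '" + extension + "'");
        }
        if (sizeInBytes > MAX_BYTES) {
            return Optional.of("File exceeds the assumed limit of " + MAX_BYTES + " bytes");
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        System.out.println(check("speech.mp3", 1_024));
        System.out.println(check("notes.txt", 1_024));
    }
}
```

In the controller above, the check could be called with the values from audioFile.getFilename() and audioFile.contentLength() before invoking transcriptionModel.call(...), returning an error response when the Optional is not empty.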

4. Summary

In this Spring AI speech-to-text tutorial, we learned the following:

- Currently, the Spring AI module only supports OpenAI’s whisper model.

- The primary class for generating transcriptions is OpenAiAudioTranscriptionModel.

- The OpenAiAudioTranscriptionModel bean (with default configuration) is available to use as soon as we add the spring-ai-openai-spring-boot-starter dependency to the project.

- We can control the default configuration values using the properties file.

- For manual configuration options, we can utilize the OpenAiAudioTranscriptionOptions builder class.

Happy Learning !!
